Step aside, GPT...

Multimodal AI just walked in.

The world of AI is ever-changing, with new technologies becoming relics in the blink of an eye.

Although it’s been barely a year since the launch of ChatGPT, the groundbreaking AI chatbot from OpenAI, it already feels like a different era. Since its launch, it has disrupted established ways of working, with people finding new ways to use it every day. ChatGPT’s launch triggered a race among tech giants across the world to develop the next big AI tool, and it also opened the eyes of authorities to the possible dangers of such tools.

Very soon, Google brought out its contender, Bard. A flood of AI tools followed in almost every sector, with each tool picking its niche and offering a specialized solution built on generative AI, the core technology running the show.

Companies started realizing the potential of chatbots in different sectors and began actively experimenting. These days, it is becoming increasingly rare to find software or websites that do not use AI to improve their offerings. There are AI tools that help you decide what to eat next based on your mood, and tools that create personalised learning plans and flashcards to help you prepare for an interview. Each day, we see new ways to use this technology.

Popular AI tools:

Grammar checkers and rewording tools (Grammarly, Wordtune, ProWritingAid)

Video creation and editing (Descript, Wondershare Filmora, Runway)

Image generation (DALL·E 3, Midjourney, Stable Diffusion)

Voice and music generation (Murf, Splash Pro, AIVA)

Knowledge management and AI grounding (Mem, Notion AI Q&A, Personal AI)

Transcription and meeting assistants (Fireflies, Airgram, Krisp)

While the world is still coping with the ramifications of the new wave of AI tools, it seems a bigger wave might just be riding on its back.

What is GPT? How does it work?

GPT, which stands for Generative Pre-trained Transformer, is a type of large language model and a prominent framework for generative artificial intelligence. GPT models are artificial neural networks used in natural language processing tasks. They are pre-trained on large amounts of text to predict what comes next in a sequence, and they use that ability to generate new text in response to a prompt.

As per ChatGPT, “GPT, or Generative Pre-trained Transformer, is like a super smart computer program that learns from lots and lots of text. It reads through huge amounts of information to understand how language works. Then, when you give it a prompt or ask it a question, it uses what it learned to generate a response that makes sense based on all that reading. It's like having a really clever friend who can write or talk about almost anything you ask them about, because they've read so much about it before.”

What made ChatGPT stand out from the many AI tools that came before it is its ability to produce high-quality and coherent text. ChatGPT works by attempting to understand your prompt and then spitting out strings of words that it predicts will best answer your question based on the data it was trained on. While that might sound relatively simple, it belies the complexity of what's going on under the hood.
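To make the idea of "predicting the next word" a little more concrete, here is a toy sketch in Python. It is not how ChatGPT is built: real GPT models use large neural networks, while this example only counts which word follows which in a tiny made-up text. The corpus and the function names (`train_bigrams`, `predict_next`, `generate`) are invented for illustration.

```python
from collections import Counter, defaultdict

# Tiny made-up training text, purely for illustration.
corpus = "the cat sat on the mat . the cat ate the fish . the dog sat on the rug ."


def train_bigrams(text):
    """Count, for every word, which words follow it in the training text."""
    follows = defaultdict(Counter)
    words = text.split()
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def predict_next(follows, word):
    """Return the word seen most often after `word`, or None if it was never seen."""
    if word not in follows:
        return None
    return follows[word].most_common(1)[0][0]


def generate(follows, start, max_words=3):
    """Build a phrase by repeatedly appending the most likely next word."""
    out = [start]
    for _ in range(max_words):
        nxt = predict_next(follows, out[-1])
        if nxt is None:
            break
        out.append(nxt)
    return " ".join(out)


model = train_bigrams(corpus)
print(predict_next(model, "sat"))  # the word that most often followed "sat"
print(generate(model, "dog"))      # a short phrase grown one word at a time
```

Even this crude counter produces plausible-looking phrases from its training text. A model like GPT follows the same loop of predicting one piece of text and then the next, but with far more data and a far richer notion of context than "the previous word".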

Enter Multimodal AI

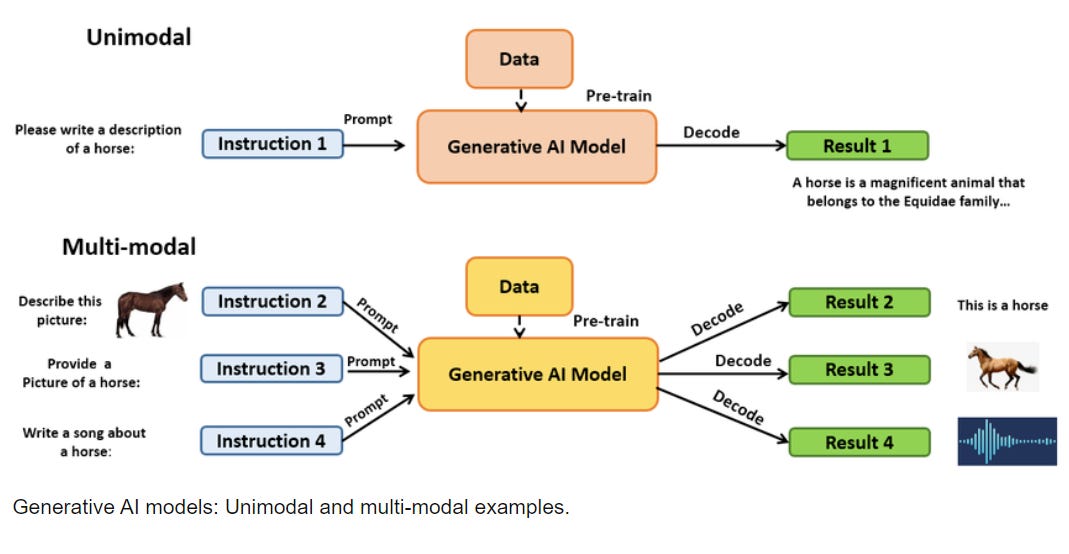

Multimodal AI simply means that the AI model is trained on more than just text: it can also work with inputs such as images, audio and video. This means you can use multimodal AI tools not just by typing your query but also by showing a video or image or simply talking to the model. The AI tool takes all the information it receives, analyzes it, interprets the query and returns the answer it judges most relevant.

Why is it a big deal?

This represents a big step forward in our journey towards AGI, or Artificial General Intelligence (a machine that can match human ability across a wide range of tasks, including making decisions it wasn’t explicitly programmed for). Think about it: we humans are also multimodal. We take inputs in all forms and use that data to decide how we should proceed.

We have already started seeing multimodal AI work its way into everyday products. GPT-4, the model behind the latest version of ChatGPT, is multimodal and can work with images as well as text. All of this results in a more cohesive output from the chatbot.

Not far behind, Google also unveiled its multimodal AI model, Gemini, in a YouTube video. The video shows how the AI model can understand context and what is happening on screen. The model is also able to communicate in a very conversational manner. However, the video drew criticism after it emerged that the demo was not as real-time as it appeared.

The next step…

All of this is leading to a new wave of gadgets. Using high-speed internet, cloud computing, and multimodal AI, we are seeing a new category of devices starting to pop up.

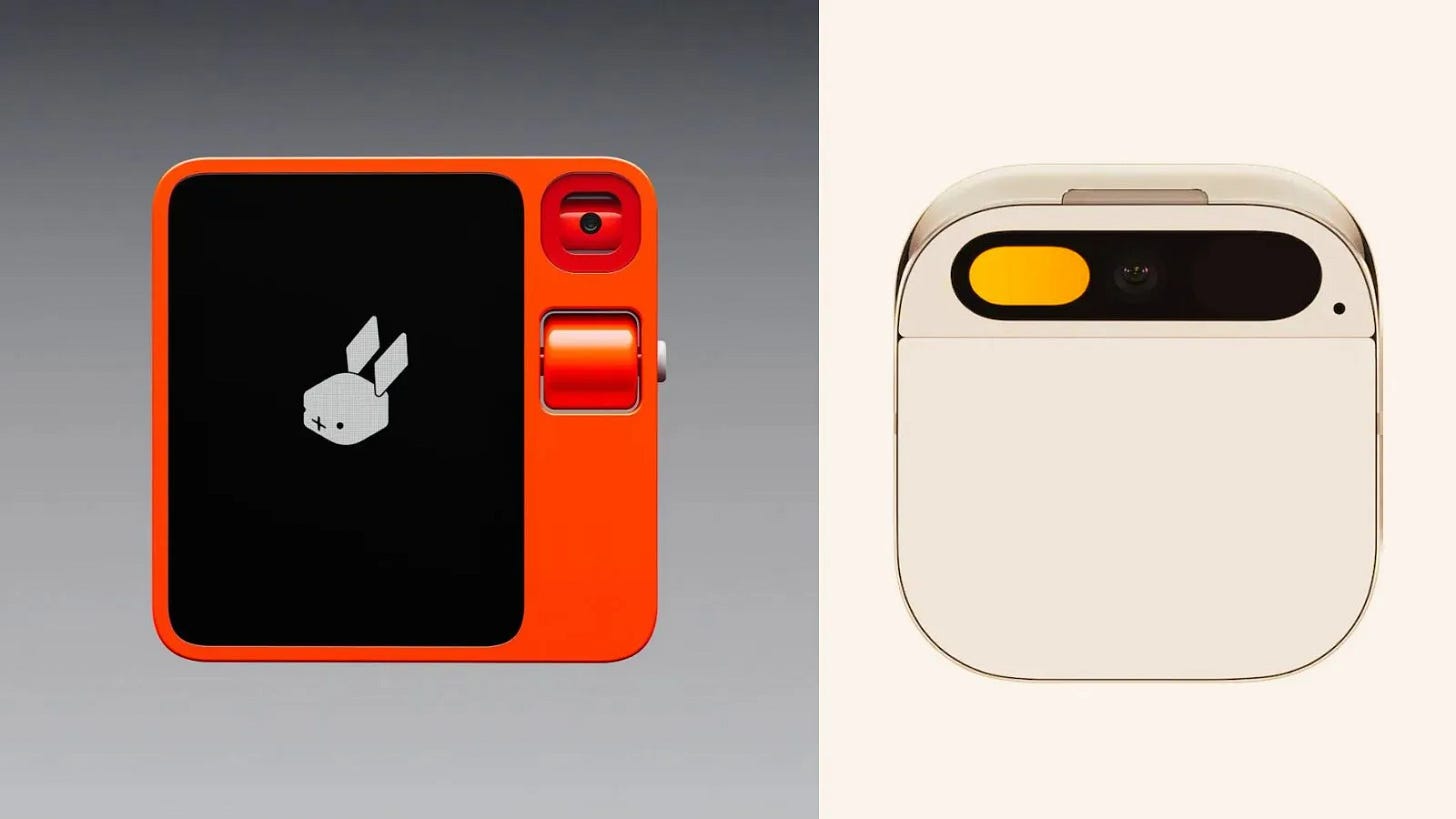

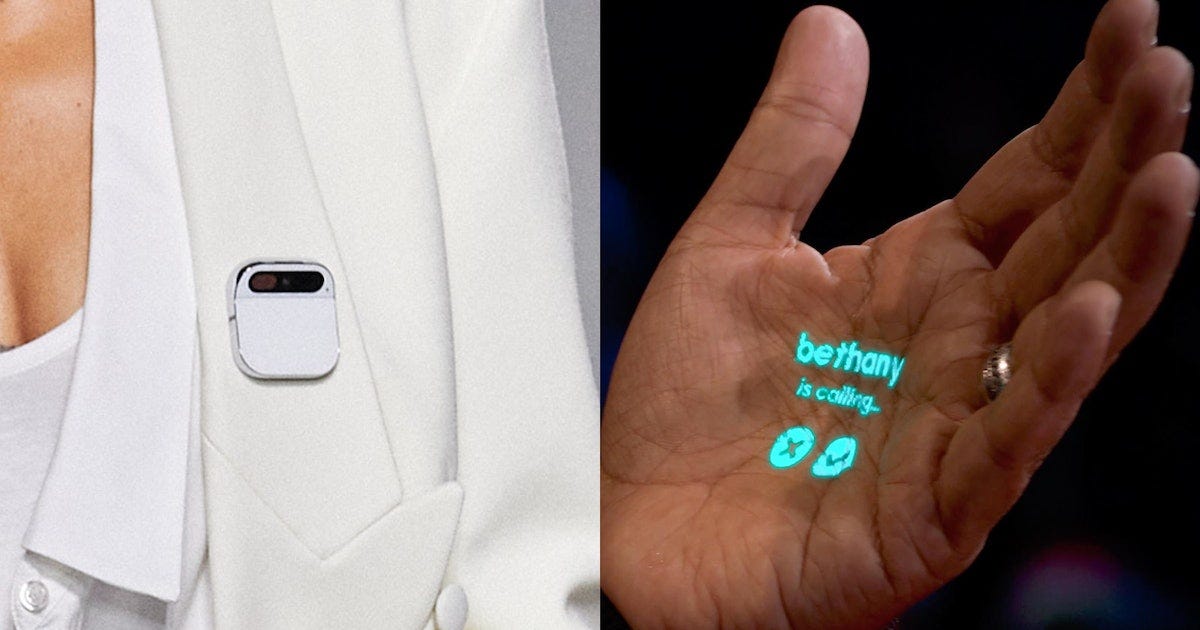

Seeing the opportunity to break into the wearable AI market early, new companies such as Rabbit and Humane have shown early versions of their offerings, the Rabbit R1 and the AI Pin. These devices aim to use multimodal large language models to provide a reimagined experience to the user. Rather than scrolling on your phone endlessly, you would simply point your camera at what you are searching for or speak your query, and the device would use all its inputs to return the most relevant answer. In a world where awareness of digital well-being is only growing, this seems like the perfect balance for many.

Depending on how well they perform and how well consumers receive them, these devices could completely change how we interact with the internet and the world.

We are all living in an exciting era. A front-row seat to an action-packed thriller. How the movie ends… it’s up to us to decide. We just might be on the cusp of the next revolution after smartphones.
